I’m an assistant professor at Westlake University (西湖大学) in the Department of AI, School of Engineering, and PI of the AI for Scientific Simulation and Discovery Lab (人工智能与科学仿真发现实验室). I did my postdoc in Stanford Computer Science (2020 to mid-2023) with Professor Jure Leskovec, received my Ph.D. from MIT in 2019, and received my B.S. from Peking University in 2012. I’m now actively recruiting postdocs, PhD students, and interns. Toward my long-term vision of accelerating scientific simulation, control, design, and discovery with machine learning (AI + Science), my research interests are:

(1) Core AI: Develop generative AI methods based on diffusion/flow matching and next-generation generative models; create self-evolving AI architectures. Develop generalist AI scientists and AI engineers.

(2) AI for Physical Sciences: Develop novel generative AI approaches for scientific simulation, control, design, and scientific discovery in complex physical systems such as fluids and plasmas. Develop underwater embodied intelligence.

(3) AI for Life Science: Develop AI virtual cells and decode the evolutionary mechanisms and intrinsic logic of living systems.

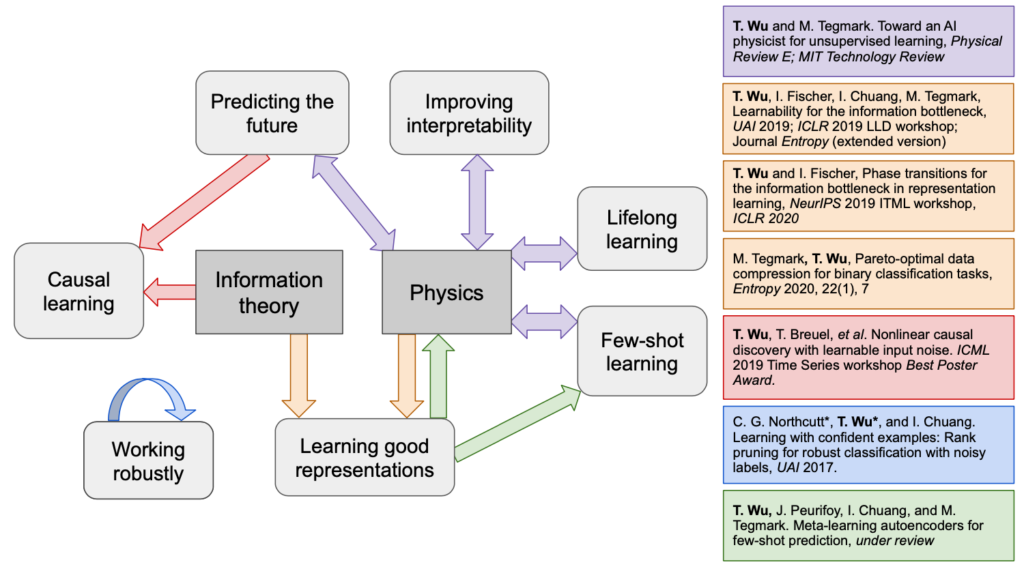

I obtained my PhD from MIT (thesis: Intelligence, physics and information – the tradeoff between accuracy and simplicity in machine learning), where I worked on AI for Physics and Physics for AI, with Prof. Isaac Chuang and Prof. Max Tegmark. I also joyfully interned at Google AI and NVIDIA Research.

Group Homepage: AI for Scientific Simulation and Discovery Lab (人工智能与科学仿真发现实验室)

Contact: wutailin@westlake.edu.cn

Google Scholar | Twitter | GitHub | Xiaohongshu (小红书): 吴泰霖Talent

Group Github | University Homepage

News:

- 2025/11 Big congrats to my co-advised PhD student Haodong Feng, who will join Zhongguancun College as a researcher (and doctoral supervisor)!

- 2025/10 We release BuildArena, where LLM agents design, build, and test rockets, cars, and bridges in a physics simulator from a single-sentence prompt! With BuildArena, AI turns words into designs, blueprints, and finally 3D mechanisms tested in real-time physics! [X][project][paper][MIT Technology Review]

- 2025/09 Big congrats to my postdoc Long Wei, who will join Fudan University as an assistant professor (and doctoral supervisor)!

- 2025/09 I serve as an area chair for ICLR 2026

- 2025/06 I serve as an area chair for NeurIPS 2025

- 2025/05 Three papers accepted at ICML 2025, one as spotlight [tweet]

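- 2025/03 Published a review paper, “Artificial Intelligence Accelerates Scientific Simulation, Design, Control and Discovery” (人工智能加速科学仿真、设计、控制和发现), in the Chinese Journal of Computational Physics (《计算物理》)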

- 2025/03 My postdoc Long Wei was named 2024 Outstanding Postdoc of Westlake University

- 2025/03 Our CL-DiffPhyCon paper was nominated for the Outstanding Youth Paper Award at the China Embodied AI Conference (CEAI 2025)

- 2025/01 Three papers accepted at ICLR 2025

- 2024/12 Co-organized the NeurIPS 2024 Safe Generative AI Workshop

- 2024/11 Featured in the Cell Press journal Matter: “35 challenges in materials science being tackled by PIs under 35(ish) in 2024” [paper]

- 2024/05 ICLR 2024 Spotlight for our paper “Compositional Generative Inverse Design”.

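- 2023/11 Featured by MIT Technology Review China (麻省理工科技评论): “Tailin Wu of Westlake University: Using AI to Advance Scientific Simulation and Discovery, with a Controllable Tradeoff between Prediction Accuracy and Computational Efficiency”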

- 2023/05 ICLR 2023 Spotlight for our paper “Learning Controllable Adaptive Simulation for Multi-resolution Physics”.

- I have been organizing a bi-weekly Reading Group on Simulation and Inverse Design, with >20 participants (PhD students, postdocs, and assistant professors) from CS, EE, ME, and Energy departments at Stanford, MIT, Westlake University, and Caltech. In each session we spend one hour discussing a relevant paper in depth (Tuesdays 7-8pm PT / Wednesdays 11am Beijing time). Contact me if you want to participate!

- Invited talk at Lawrence Livermore National Laboratory (LLNL)’s DDPS seminar, “Learning to accelerate large-scale physical simulations” [video].

- Guest lecture at Caltech CS159, “AI Physicist and Machine Learning for Simulations” [slides]

- Organized the “Deep Learning for Simulation” workshop at ICLR 2021

- Our paper Toward an AI Physicist for Unsupervised Learning was published in Physical Review E as part of a PRE Spotlight on Machine Learning in Physics.

- Our paper Graph Information Bottleneck was featured in Synced AI Technology & Industry Review (机器之心).

- Best Poster Award at the ICML 2019 Time Series Workshop

- MIT Technology Review and Motherboard featured our work Toward an AI Physicist for Unsupervised Learning.

Publications:

RealPDEBench: A Benchmark for Complex Physical Systems with Real-World Data

[project page][arXiv][code]

Peiyan Hu*, Haodong Feng*, Hongyuan Liu*, Tongtong Yan, Wenhao Deng, Tianrun Gao, Rong Zheng, Haoren Zheng, Chenglei Yu, Chuanrui Wang, Kaiwen Li, Zhi-Ming Ma, Dezhi Zhou, Xingcai Lu, Dixia Fan, Tailin Wu†

TL;DR: [ML for simulation] We introduce the first benchmark for complex physical systems with paired real-world data and simulated data, and explore how to bridge simulated and real-world data.

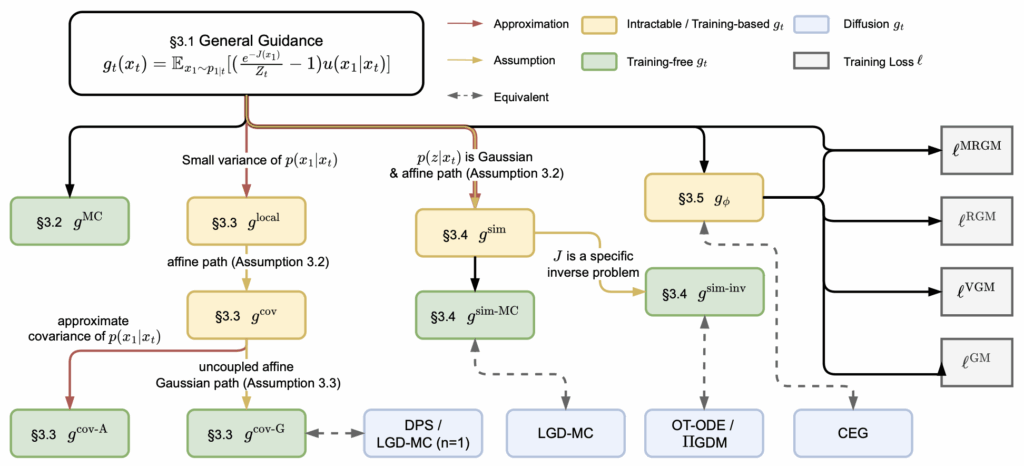

On the guidance of flow matching

Ruiqi Feng, Chenglei Yu*, Wenhao Deng*, Peiyan Hu, Tailin Wu†

ICML 2025 spotlight

[paper][arXiv][code]

TL;DR: [Generative model] We introduce the first framework for general flow matching guidance, from which new guidance methods are derived and many classical guidance methods are covered as special cases.

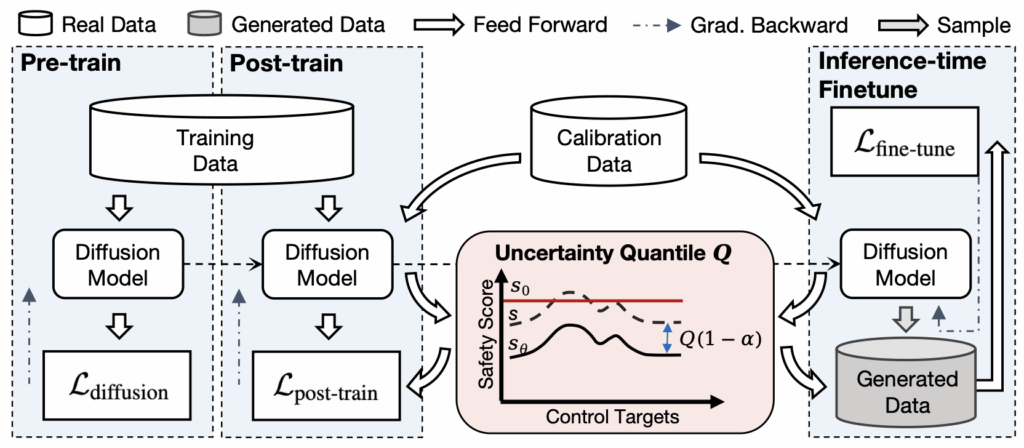

From Uncertain to Safe: Conformal Fine-Tuning of Diffusion Models for Safe PDE Control

Peiyan Hu*, Xiaowei Qian*, Wenhao Deng, Rui Wang, Haodong Feng, Ruiqi Feng, Tao Zhang, Long Wei, Yue Wang, Zhi-Ming Ma, Tailin Wu†

ICML 2025

[paper][arXiv][code]

TL;DR: [ML for physical control] We propose safe diffusion control with provable guarantees, using conformal prediction for uncertainty quantification and enforcing safety via post-training and fine-tuning.

M2PDE: Compositional Generative Multiphysics and Multi-component PDE Simulation

Tao Zhang, Zhenhai Liu, Feipeng Qi, Yongjun Jiao†, Tailin Wu†

ICML 2025

[paper][arXiv][code]

TL;DR: [ML for simulation] We introduce a diffusion-based approach for multiphysics and multi-component simulations. It can learn from decoupled training data and predict coupled multiphysics and multi-component simulations.

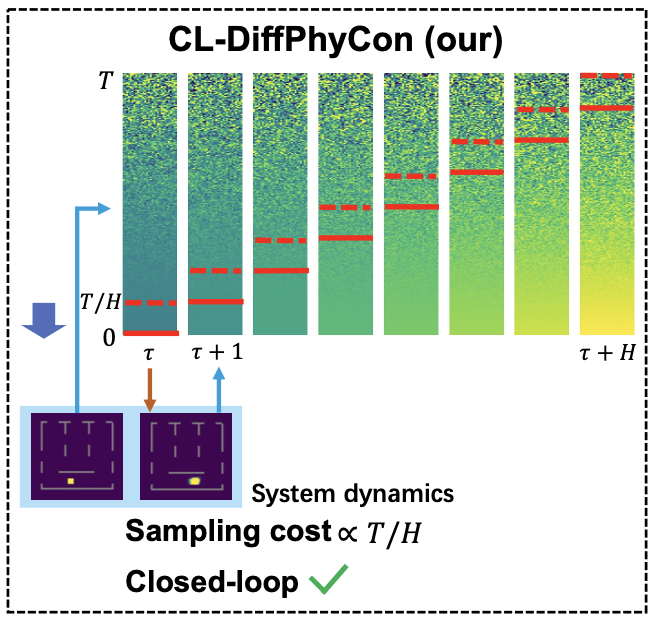

CL-DiffPhyCon: Closed-loop Diffusion Control of Complex Physical Systems

Long Wei*, Haodong Feng*, Yuchen Yang, Ruiqi Feng, Peiyan Hu, Xiang Zheng, Tao Zhang, Dixia Fan, Tailin Wu†

ICLR 2025

Nominated for the Outstanding Youth Paper Award at the China Embodied AI Conference (CEAI 2025)

[paper][arXiv][code]

TL;DR: [ML for physical control] We propose a diffusion method with an asynchronous denoising schedule for controlling physical systems. It achieves closed-loop control with significantly faster sampling.

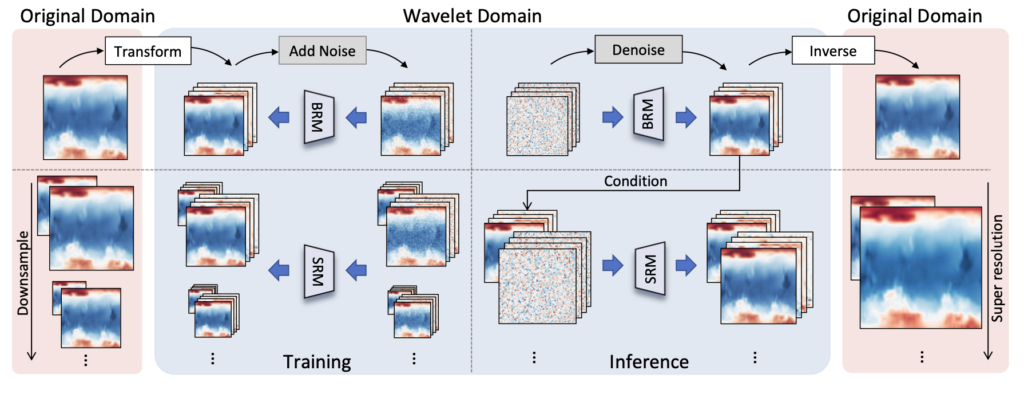

Wavelet Diffusion Neural Operator

Peiyan Hu*, Rui Wang*, Xiang Zheng, Tao Zhang, Haodong Feng, Ruiqi Feng, Long Wei, Yue Wang, Zhi-Ming Ma, Tailin Wu†

ICLR 2025

[paper][arXiv][code]

TL;DR: [ML for simulation & control] We propose Wavelet Diffusion Neural Operator (WDNO), a novel method for generative PDE simulation and control that addresses two challenges for diffusion models: modeling system states with abrupt changes and generalizing to higher resolutions.

DiffPhyCon: A Generative Approach to Control Complex Physical Systems

Long Wei*, Peiyan Hu*, Ruiqi Feng*, Haodong Feng, Yixuan Du, Tao Zhang, Rui Wang, Yue Wang, Zhi-Ming Ma, Tailin Wu†

NeurIPS 2024; Oral at ICLR 2024 AI4PDE workshop

[paper][arXiv][project page][tweet][code]

TL;DR: [ML for physical control] We introduce DiffPhyCon, a novel method for controlling complex physical systems using diffusion generative models, by minimizing both the learned generative energy function and the specified objective.

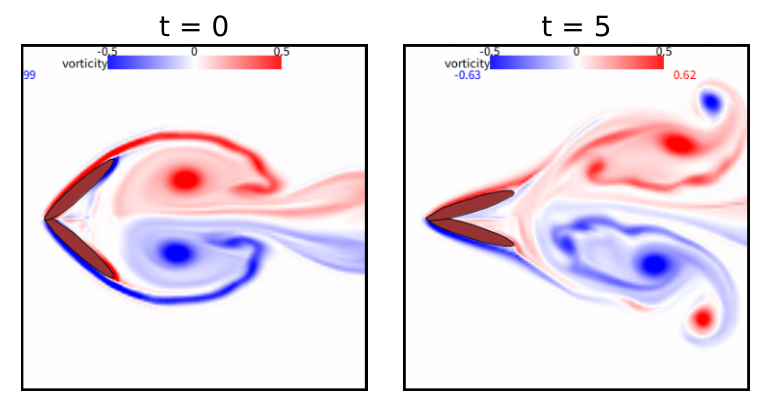

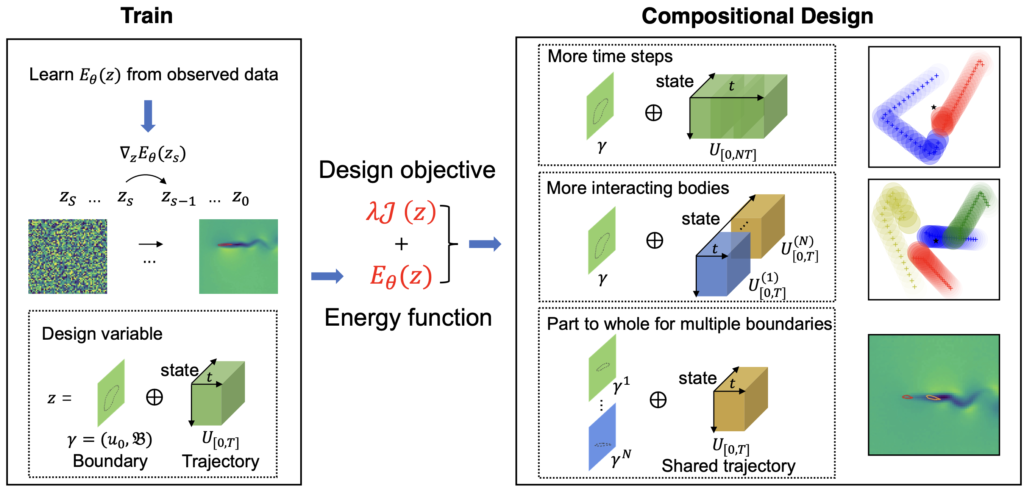

Compositional Generative Inverse Design

Tailin Wu*†, Takashi Maruyama*, Long Wei*, Tao Zhang*, Yilun Du*, Gianluca Iaccarino, Jure Leskovec

ICLR 2024 Spotlight

[paper][arXiv][code][project page][slides]

TL;DR: [ML for physical design] We introduce CinDM, a method that uses compositional generative models to design boundaries and initial states for physical simulations that are significantly more complex than those seen in training.

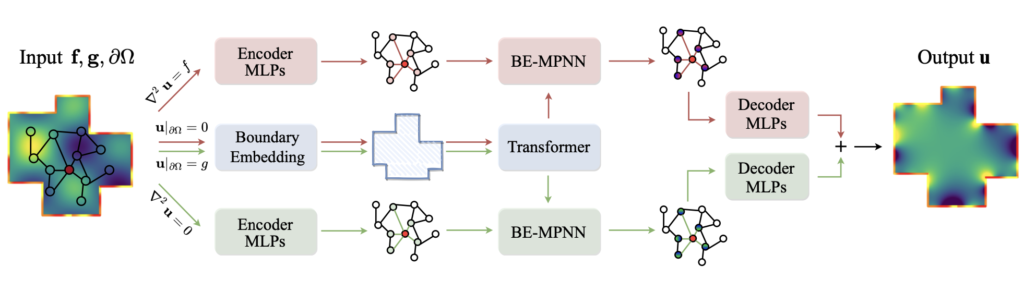

BENO: Boundary-embedded Neural Operators for Elliptic PDEs

Haixin Wang*, Jiaxin Li*, Anubhav Dwivedi, Kentaro Hara, Tailin Wu†

ICLR 2024; Oral at NeurIPS 2023 AI4Science workshop

[paper][arXiv][project page][code]

TL;DR: [ML for simulation] We introduce a boundary-embedded neural operator that incorporates complex boundary shapes and inhomogeneous boundary values into the solution of elliptic PDEs.

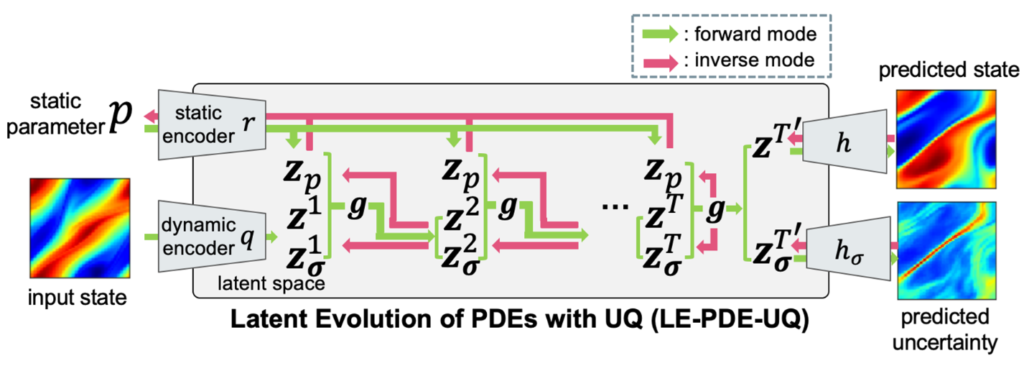

Uncertainty Quantification for Forward and Inverse Problems of PDEs via Latent Global Evolution

Tailin Wu*, Willie Neiswanger*, Hongtao Zheng*, Stefano Ermon, Jure Leskovec

AAAI 2024 Oral (top 10% of accepted papers)

[paper][arXiv][code]

TL;DR: [ML for simulation] We introduced an uncertainty quantification method for forward simulation and inverse problems of PDEs using latent uncertainty propagation.

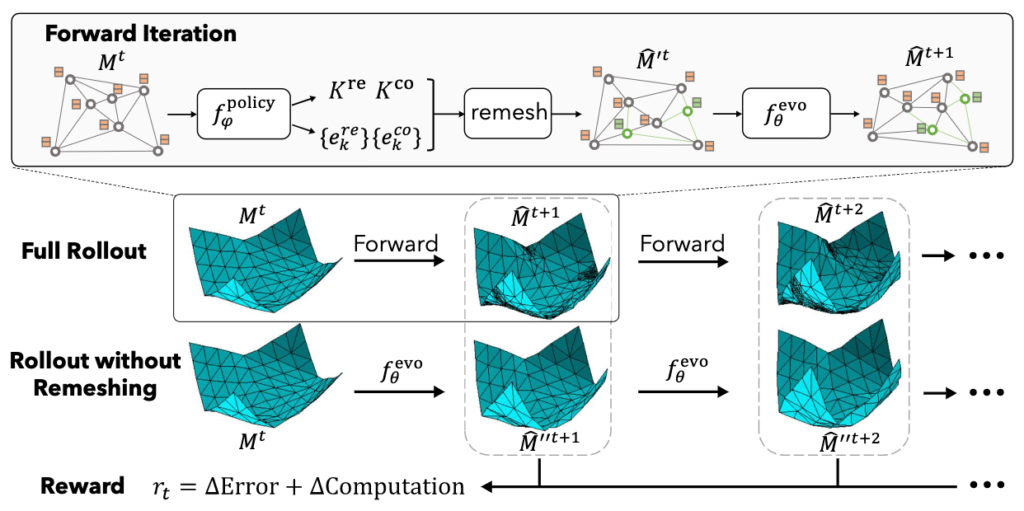

Learning Controllable Adaptive Simulation for Multi-resolution Physics

Tailin Wu*, Takashi Maruyama*, Qingqing Zhao*, Gordon Wetzstein, Jure Leskovec

ICLR 2023 notable top-25%

Best poster award at SUPR

[paper][project page][arXiv][poster][slides][tweet][code]

TL;DR: [ML for simulation] We introduced the first fully deep-learning-based surrogate models for physical simulations that jointly learn forward prediction and optimize computational cost with RL.

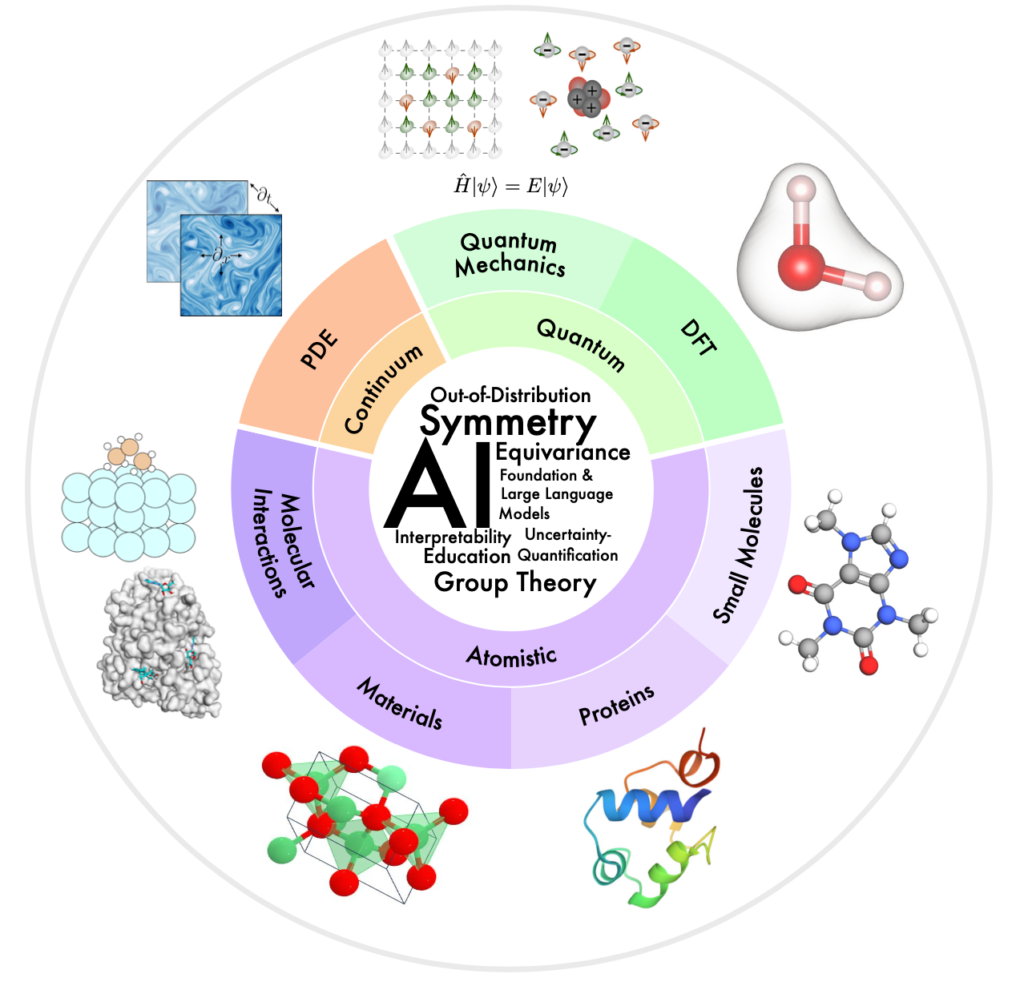

Artificial Intelligence for Science in Quantum, Atomistic, and Continuum Systems

Xuan Zhang, … Tailin Wu (31st of 63 authors), … Shuiwang Ji

[paper][website]

TL;DR: [ML for simulation] Review paper summarizing the key challenges, current frontiers, and open questions of AI4Science, for quantum, atomistic, and continuum systems.

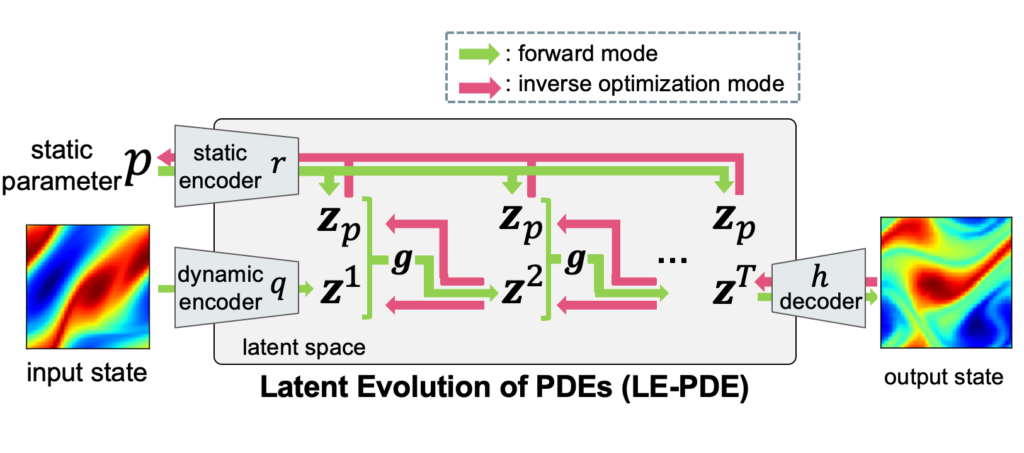

Learning to Accelerate Partial Differential Equations via Latent Global Evolution

Tailin Wu, Takashi Maruyama, Jure Leskovec

NeurIPS 2022

[project page][arXiv][paper][poster][code]

TL;DR: [ML for simulation] We introduced a method for accelerating forward simulation and inverse optimization of PDEs via latent global evolution, achieving up to 15x speedup while maintaining accuracy competitive with SOTA models.

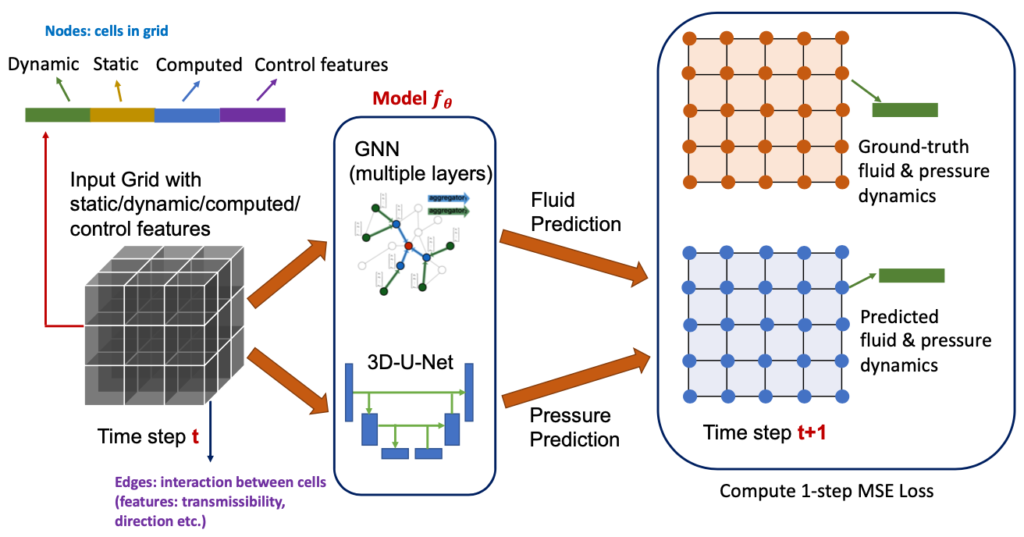

Learning Large-scale Subsurface Simulations with a Hybrid Graph Network Simulator

Tailin Wu, Qinchen Wang, Yinan Zhang, Rex Ying, Kaidi Cao, Rok Sosič, Ridwan Jalali, Hassan Hamam, Marko Maucec, Jure Leskovec

SIGKDD 2022; long contributed talk at the ICLR AI for Earth and Space Sciences Workshop

[arXiv][project page][publication]

TL;DR: [ML for simulation] We introduced a hybrid GNN-based surrogate model for large-scale fluid simulation, achieving up to 18x speedup and scaling to 3D systems with over 10^6 cells per time step (100x more than prior models).

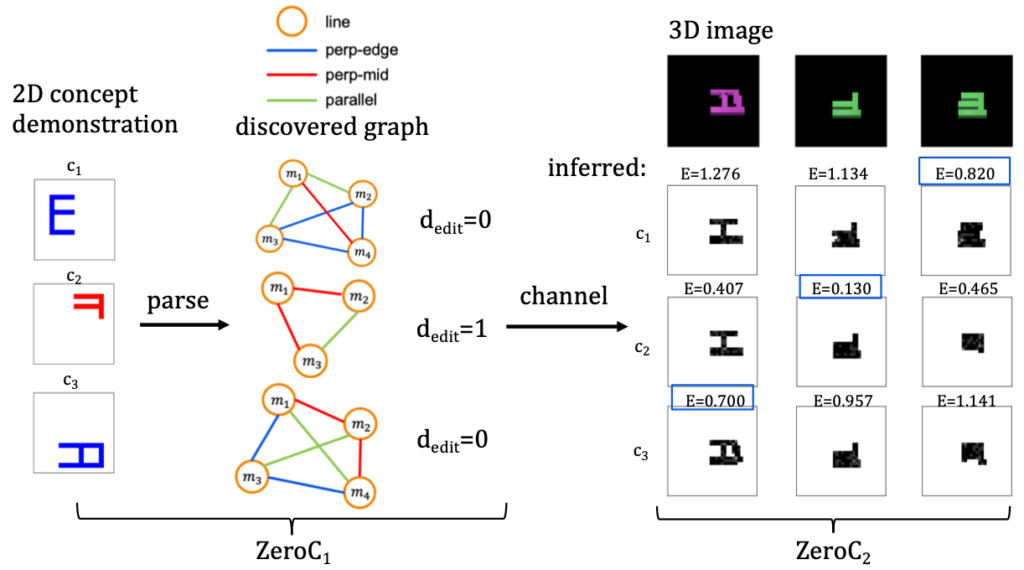

ZeroC: A Neuro-Symbolic Model for Zero-shot Concept Recognition and Acquisition at Inference Time

Tailin Wu, Megan Tjandrasuwita, Zhengxuan Wu, Xuelin Yang, Kevin Liu, Rok Sosič, Jure Leskovec

NeurIPS 2022

[arXiv][project page][poster][paper][slides][code]

TL;DR: [ML for discovery] We introduce a neuro-symbolic method that, trained on simpler concepts and relations, can generalize zero-shot to more complex, hierarchical concepts and transfer the knowledge across domains.

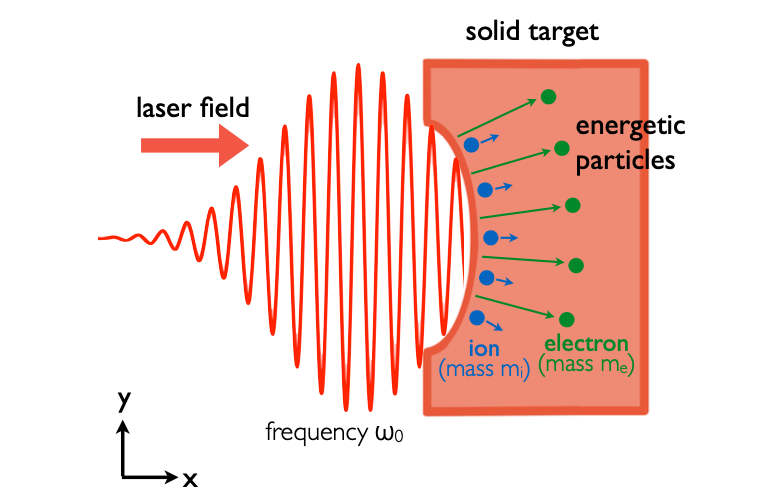

Learning Efficient Hybrid Particle-continuum Representations of Non-equilibrium N-body Systems

Tailin Wu, Michael Sun, H.G. Jason Chou, Pranay Reddy Samala, Sithipont Cholsaipant, Sophia Kivelson, Jacqueline Yau, Zhitao Ying, E. Paulo Alves, Jure Leskovec†, Frederico Fiuza†

NeurIPS 2022 AI4Science workshop [paper][poster]

TL;DR: [ML for simulation] We introduced a hybrid particle-continuum representation for simulation of multi-scale, non-equilibrium, N-body physical systems, speeding up laser-plasma simulation 8-fold while reducing error 6.8-fold.

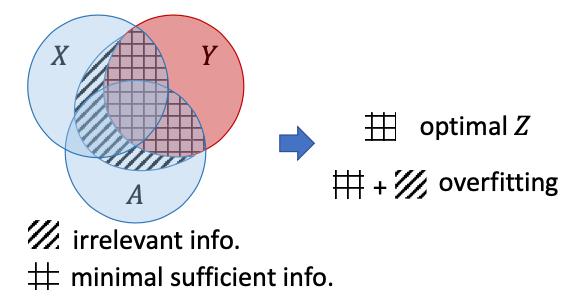

Graph Information Bottleneck

Tailin Wu*, Hongyu Ren*, Pan Li, Jure Leskovec

NeurIPS 2020

[arXiv][project page][poster][slides][code]

Featured in Synced AI Technology & Industry Review (机器之心) [news]

TL;DR: [representation learning] We introduced Graph Information Bottleneck, a principle and representation learning method for learning minimum sufficient information from graph-structured data, significantly improving GNNs’ robustness to adversarial attacks and random noise.

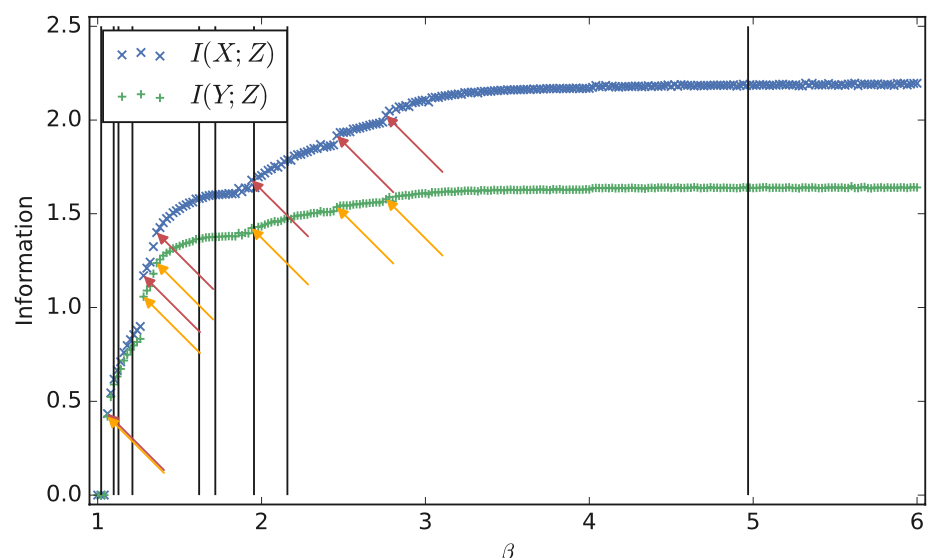

Phase transitions for the Information Bottleneck in representation learning

Tailin Wu, Ian Fischer

ICLR 2020

[paper][arXiv][video][NeurIPS ITML][poster]

TL;DR: [representation learning] We theoretically analyzed the Information Bottleneck objective to understand and predict observed phase transitions (sudden jumps in accuracy) in the prediction vs. compression tradeoff.

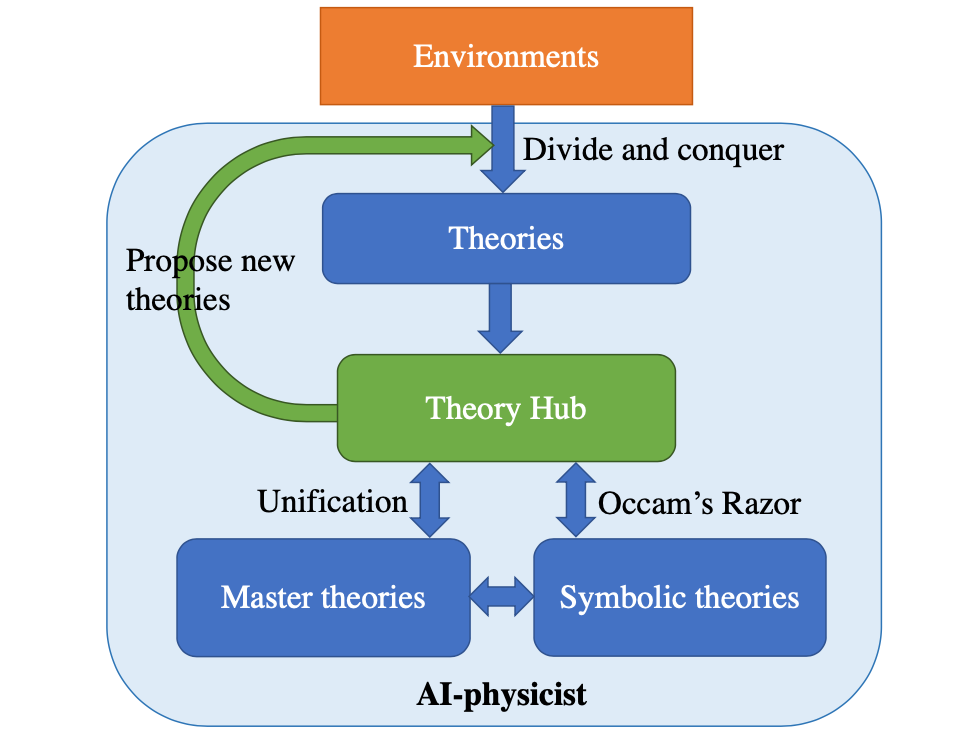

Toward an Artificial Intelligence Physicist for Unsupervised Learning

Tailin Wu, Max Tegmark

Physical Review E 100 (3), 033311 [journal article][arXiv][code]

Featured in MIT Technology Review and Motherboard

Featured in PRE Spotlight on Machine Learning in Physics

TL;DR: [ML for discovery] We introduced a paradigm and algorithms for learning theories (small, interpretable models, each paired with a domain classifier), each specializing in explaining aspects of a dynamical system. It combines four inductive biases from physicists: divide-and-conquer, Occam’s razor via MDL, unification, and lifelong learning.

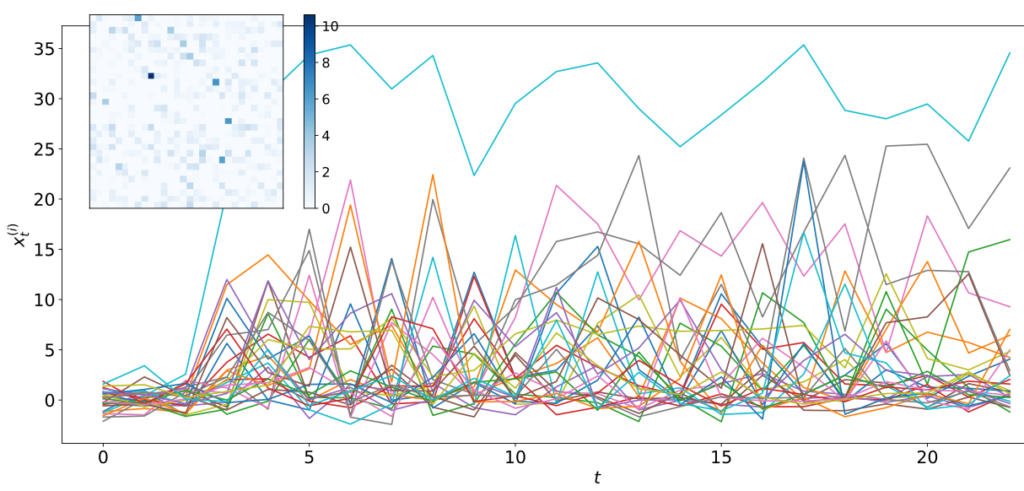

Discovering Nonlinear Relations with Minimum Predictive Information Regularization

Tailin Wu, Thomas Breuel, Michael Skuhersky, Jan Kautz

Best Poster Award at ICML 2019 Time Series Workshop

[arXiv][ICML workshop][poster][code]

TL;DR: [ML for discovery] We introduced a method for inferring Granger causal relations in large-scale, nonlinear time series using only observational data.

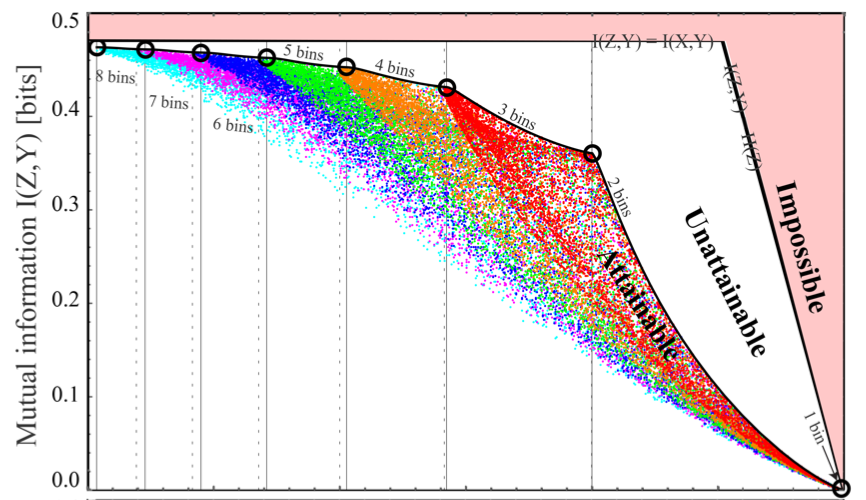

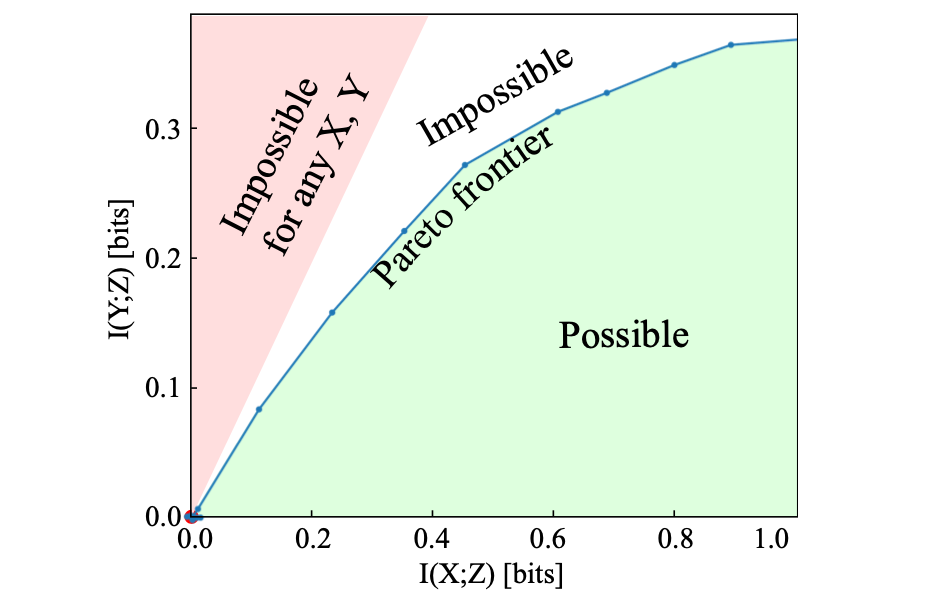

Pareto-optimal data compression for binary classification tasks

Max Tegmark, Tailin Wu

Entropy 2020, 22(1), 7 (featured on the issue cover). arXiv:1908.08961.

[Entropy][arXiv][code]

TL;DR: [representation learning] We introduce an algorithm for discovering the Pareto frontier of the compression vs. prediction tradeoff in binary classification of neural networks.

Learnability for the Information Bottleneck

Tailin Wu, Ian Fischer, Isaac Chuang, Max Tegmark

UAI 2019, Tel Aviv, Israel [arXiv][pdf][poster][slides]

Entropy 21(10), 924 (Extended version) [journal article]

Spotlight at the ICLR 2019 Learning with Limited Data workshop [openreview][poster]

TL;DR: [representation learning] We theoretically derive the conditions for learnability in the compression vs. prediction tradeoff of the Information Bottleneck objective.

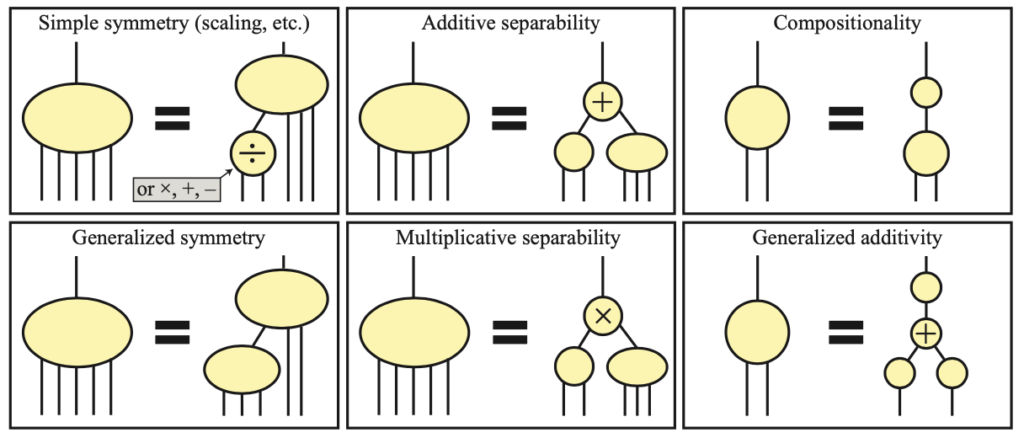

AI Feynman 2.0: Pareto-optimal symbolic regression exploiting graph modularity

Silviu-Marian Udrescu, Andrew Tan, Jiahai Feng, Orisvaldo Neto, Tailin Wu, Max Tegmark

NeurIPS 2020, Oral

[arXiv][code]

TL;DR: [ML for discovery] We introduce a state-of-the-art symbolic regression algorithm that robustly rediscovers 100 physics equations from the Feynman Lectures, even from noisy data.

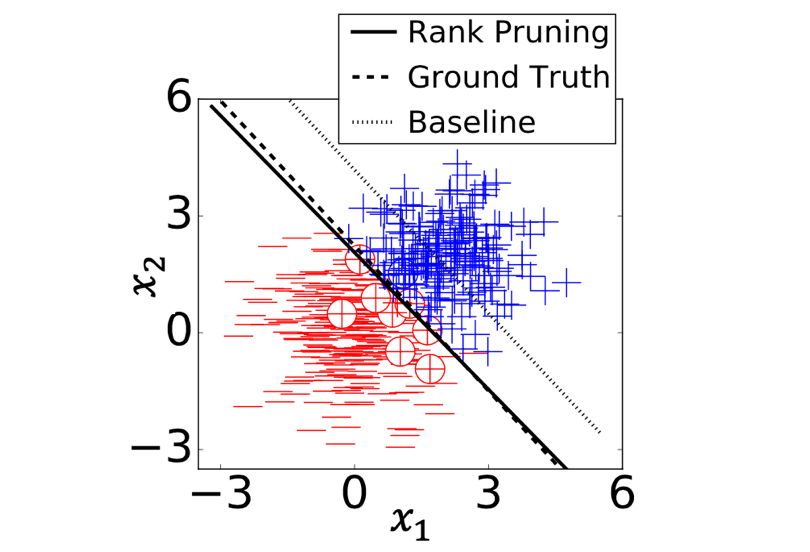

Learning with Confident Examples: Rank Pruning for Robust Classification with Noisy Labels

Curtis G. Northcutt*, Tailin Wu*, Isaac Chuang

UAI 2017

[arXiv][code]

The Cleanlab library is built on top of this work.

TL;DR: [representation learning] We introduce a rank pruning method for classification with noisy labels, which provably achieves performance similar to that obtained without label noise.

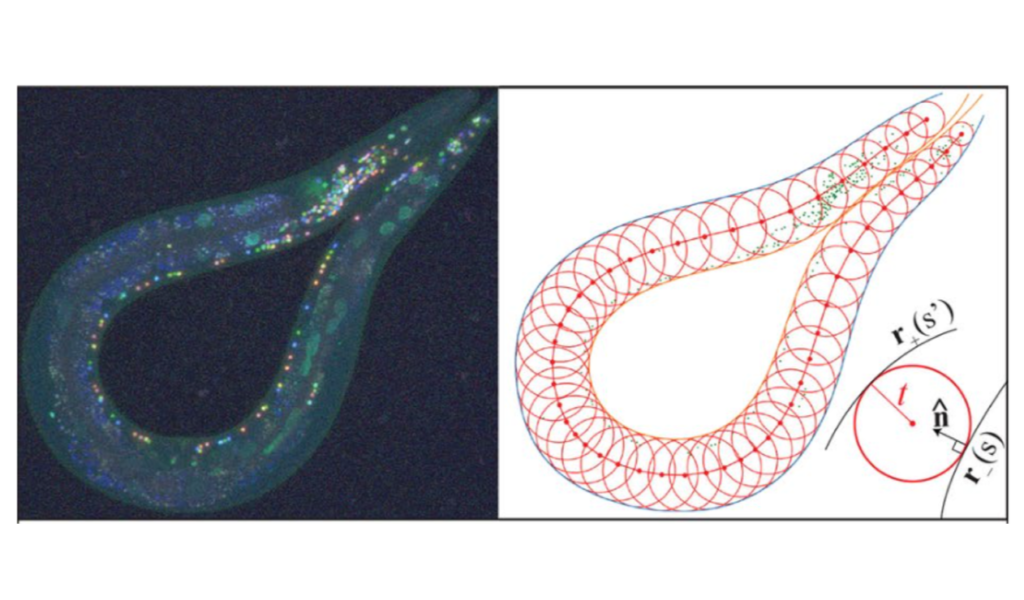

Toward a more accurate 3D atlas of C. elegans neurons

Michael Skuhersky, Tailin Wu, Eviatar Yemini, Amin Nejatbakhsh, Edward Boyden, Max Tegmark

BMC Bioinformatics 23(1), 1-18 [paper]

TL;DR: [ML for discovery] We introduce a method for identifying neuron IDs in C. elegans and build a more accurate neuron atlas using the NeuroPAL technique.

Preventing and Reversing Vacuum-Induced Optical Losses in High-Finesse Tantalum (V) Oxide Mirror Coatings

Dorian Gangloff*, Molu Shi*, Tailin Wu*, Alexei Bylinskii, Boris Braverman, Michael Gutierrez, Rosanna Nichols, Junru Li, Kai Aichholz, Marko Cetina, Leon Karpa, Branislav Jelenković, Isaac Chuang, and Vladan Vuletić

Optics Express 23(14). (2016) [paper]

Iterative Precision Measurement of Branching Ratios Applied to 5P states in ⁸⁸Sr⁺

Helena Zhang, Michael Gutierrez, Guang Hao Low, Richard Rines, Jules Stuart, Tailin Wu, Isaac Chuang

New Journal of Physics, 18(12):123021, (2016) [paper]

Pathway-based mean-field model for Escherichia coli chemotaxis

Guangwei Si, Tailin Wu, Qi Ouyang, and Yuhai Tu

Physical Review Letters, 109, 048101 (2012) [paper]

Frequency-Dependent Escherichia coli Chemotaxis Behavior

Xuejun Zhu, Guangwei Si, Nianpei Deng, Qi Ouyang, Tailin Wu, Zhuoran He, Lili Jiang, Chunxiong Luo, and Yuhai Tu

Physical Review Letters. 108, 128101 (2012) [paper]

Teaching:

- 2025 Spring: AI + Science (for undergraduate students)

- 2024/2025 Spring: Frontiers in Computer Science and Technology (for PhD students)

- Course website: ai4s.lab.westlake.edu.cn/course

- Course interactive notebooks: github.com/AI4Science-WestlakeU/frontiers_in_AI_course

- Guest Lecturer, AI Physicist and Machine Learning for Simulations, for Caltech CS159: Advanced Topics in Machine Learning, April, 2022.

- Teaching Assistant, MIT 8.01: Classical Mechanics, September to December 2016. Responsible for developing part of the online interactive sessions (additional materials and automatic grading) and for in-person tutoring.

- Teaching Assistant, MIT 8.02: Electricity and Magnetism, February to May 2017. Responsible for developing part of the online interactive sessions (additional materials and automatic grading) and for in-person tutoring.

Invited talks:

Featured talks:

[2023/03] AI + Science: motivation, advances and open problems, at Swarma (集智) [slides][summary]

[2023/05] Graph Neural Networks for Large-scale Scientific Simulations, at the First Symposium on AI for Scientific Computing (首届人工智能科学计算研讨会)

[2023/03] Steps toward an AI scientist: neuro-symbolic models for concept generalization and theory learning, at AAAI 2023 Symposium of Computational Approaches to Scientific Discovery [slides]

[2023/02] Learning structured representations for accelerating scientific discovery and simulation, at Yale Department of Statistics and Data Science

[2022/06] Learning to Accelerate Large-scale Physical Simulations in Fluid and Plasma Physics, at the Data-driven Physical Simulations (DDPS) seminar at Lawrence Livermore National Laboratory [video]

[2022/04] Guest lecture at Caltech CS159, AI Physicist and Machine Learning for Simulations [slides]

Other invited talks:

[2025/04] Generative models for the design and control of complex systems, and test-time scaling, at the PKU Frontier Explorers Salon (北大前沿探索者沙龙)

[2024/12] AI + Science and Engineering: From 2025 to 2035, at the Pujiang AI Academic Annual Conference (浦江AI学术年会)

[2024/10] Design and control of complex systems based on diffusion generative models, at CNCC 2024

[2024/10] Learning adaptive and compositional networks for multi-resolution simulation and inverse design, at IMS-NTU joint workshop on Applied Geometry for Data Sciences, Part I

[2024/07] Designing and controlling complex systems via diffusion generative models, at the 10th Shanghai International Symposium on Nonlinear Sciences and Applications

[2024/04] Envisioning AI + Science in 2030, at 2050 Yunqi Forum

[2024/03] Uncertainty Quantification for Forward and Inverse Problems of PDEs via Latent Global Evolution, at SIAM Conference on Uncertainty Quantification (UQ24)

[2024/02] Compositional generative inverse design, at AI4Science Talks [slides]

[2024/01] Machine learning for accelerating scientific simulation, design and control, at Annual Meeting of Peking University Center for Quantitative Biology

[2023/11] GNN for scientific simulations: towards adaptive multi-resolution simulators and a foundation neural operator, at LoG Shanghai Meetup

[2023/08] Machine learning of structured representations for accelerating scientific discovery and simulation, at the Chinese Physical Society (CPS) 2023 Fall Meeting (中国物理学会2023秋季会议)

[2023/08] Graph neural network for accelerating scientific simulation, at the 2023 AI for Science Summit (2023科学智能峰会)

[2023/08] Phase transitions in the universal tradeoff between accuracy and simplicity in machine learning, at HK Satellite of StatPhys28

[2023/07] Toward an AI physicist for scientific discovery and simulation, at Guokr Future Lightcone (果壳未来光锥)

[2023/06] Machine learning of structured representations for accelerating scientific discovery and simulation, at Tsinghua University (清华大学)

[2023/06] Learning structured representations for accelerating simulation and design, at the School of Intelligence Science and Technology, Peking University (北京大学)

[2023/05] Steps toward an AI scientist: neuro-symbolic models for concept generalization and theory learning, at Brown University Autonomous Empirical Research group.

[2023/04] AI for scientific design: Surrogate model + backpropagation method, at Swarma [slides]

[2023/04] Learning Controllable Adaptive Simulation for Multi-resolution Physics, at Stanford Data for Sustainability Conference 2023 [slides]

[2023/03] Learning Controllable Adaptive Simulation for Multi-resolution Physics, at TechBeat [slides][video]

[2023/02] Graph Neural Networks for Large-scale Simulations, at Stanford HAI Climate-Centred Student Affinity Group [slides]

[2023/02] ZeroC: A Neuro-Symbolic Model for Zero-shot Concept Recognition and Acquisition at Inference Time, at AI Time [slides]

[2022/12] Steps toward an AI scientist: neuro-symbolic models for zero-shot learning of concepts and theories, at BIGAI

[2022/09] Learning to Accelerate Large-Scale Physical Simulations in Fluid and Plasma Physics, at SIAM 2022 Conference on Mathematics of Data Science

[2022/06] Learning to accelerate simulation and inverse optimization of PDEs via latent global evolution, at Stanford CS ML lunch

[2021/12] Machine learning of physics theories, at Alan Turing Institute, Machine Learning and Dynamical Systems Seminar [video]

[2021/10] Graph Information Bottleneck, at Beijing Academy of Artificial Intelligence (BAAI)

[2021/06] Machine learning of physics theories and its universal tradeoff between accuracy and simplicity, at Workshop on Artificial Scientific Discovery 2021 [slides][video]

[2021/04] Machine Learning of Physics Theories, at Workshop on Artificial Scientific Discovery 2021, Summer school hosted by Max Planck Institute for the Science of Light [slides][video]

[2021/04] Phase transitions on the tradeoff between prediction and compression in machine learning, at Stanford CS ML lunch

[2021/03] Learning to accelerate the simulation of PDEs, at CLARIPHY Topical Meetings [video]

[2021/02] Machine Learning of Physics Theories, at Seminar Series of SJTU Institute of Science [video]

[2021/02] Phase transitions on the universal tradeoff between prediction and compression in machine learning, at Math Machine Learning seminar MPI MIS + UCLA [video]

[2020/10] Machine learning of physics theories and its universal tradeoff between accuracy and simplicity, at Los Alamos National Lab

[2020/02] Phase transitions for the information bottleneck, at UIUC

Reviewers:

- Area chair for ICML 2026, ICLR 2026, NeurIPS 2025

- Proceedings of the National Academy of Sciences (PNAS)

- Nature Machine Intelligence

- Nature Communications

- Nature Scientific Reports

- Science Advances

- Patterns (by Cell Press)

- iScience (by Cell Press)

- PLoS Computational Biology

- NeurIPS 2024, NeurIPS 2023, NeurIPS 2022, NeurIPS 2021, NeurIPS 2020

- ICML 2024, ICML 2023, ICML 2022, ICML 2021, ICML 2020

- ICLR 2024, ICLR 2023, ICLR 2022

- SIGKDD 2023, SIGKDD 2022

- SIAM 2024 International Conference on Data Mining (SDM’24)

- Learning on Graphs Conference (LoG)

- AAAI 2025

- TMLR

- Chinese Physics Letters, Chinese Physics B (Young Scientist Committee)

- IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- IEEE Transactions on Image Processing (TIP)

- IEEE Transactions on Audio, Speech and Language Processing (T-ASL)

- IEEE Transactions on Network Science and Engineering

- IEEE Signal Processing Letters

- IEEE International Symposium on Information Theory (ISIT)

- Neural Computation

- Physica D

- Journal of Petroleum Science and Engineering

Education:

- 2020-2023.05: Stanford University

  Postdoctoral scholar in Computer Science Department

- 2012-2019: Massachusetts Institute of Technology

  Ph.D. in Department of Physics

- 2008-2012: Peking University (北京大学)

  Bachelor of Science in Physics

Experience:

- Summer 2019: Google AI, Mountain View

  Research intern with Ian Fischer and Alex Alemi

- Summer 2018: NVIDIA Research, Santa Clara

  Research intern with Thomas Breuel and Jan Kautz

- 2015-2016: Co-President, MIT CSSA

Service:

- Lead organizer and program committee, ICLR 2021 Deep Learning for Simulation Workshop (simDL), 2021.

- Co-President, MIT Chinese Student and Scholar Association (MIT CSSA), 2015-2016.

- Executive board member, MIT Chinese Student and Scholar Association (MIT CSSA), 2014-2016

- Mentor, Stanford CS undergraduate mentoring program for students from underrepresented backgrounds, 2021.

- Mentor, Stanford summer undergraduate research program (CURIS).

Selected open-source projects:

- CinDM (ICLR 2024 spotlight): https://github.com/AI4Science-WestlakeU/cindm

- LAMP (ICLR 2023 spotlight): https://github.com/snap-stanford/lamp

- LE-PDE (NeurIPS 2022): https://github.com/snap-stanford/le_pde

- AI Physicist (Physical Review E): https://github.com/tailintalent/AI_physicist

- GIB (NeurIPS 2020): https://github.com/snap-stanford/GIB

- ZeroC (NeurIPS 2022): https://github.com/snap-stanford/zeroc

- Causal Learning (best poster award, TimeSeries@ICML 2019): https://github.com/tailintalent/causal

- Pytorch_net Library: https://github.com/tailintalent/pytorch_net

Alumni (selected from directly supervised students):

- Megan Tjandrasuwita, summer intern, 2021 → Ph.D. student at MIT EECS

- Zhengxuan Wu, research assistant, 2020-2021 → Ph.D. student at Stanford Computer Science

- Xuelin Yang, research assistant, 2020-2021 → Ph.D. student at UC Berkeley Statistics

- Qingqing Zhao, 2022, Ph.D. student collaborator @ Stanford Electrical Engineering

- Qinchen Wang, research assistant, 2021-2022, master’s student @ Stanford Computer Science

- Pranay Reddy Samala, research assistant, 2021-2022 Spring → Software Engineer @ Waymo

- Takashi Maruyama, 2022-2023, visiting scholar @ NEC

- Haixin Wang, intern, 2023-2024 → Ph.D. student at UCLA CS

My favorites:

- Future:

  - AGE OF BEYOND: If Humans & AI United: This is humanity’s future we should all strive for.

- Video:

  - Timelapse of the future: A Journey to the End of Time: The vastness of the Universe lies not in its space, but in its time. This video is utterly beautiful, exhilarating, and soothing, making us rethink time, and what we want to do in the blink of time the Universe has given us.

- Sci-fi show:

  - The Expanse: A realistic, amazing sci-fi show depicting the solar system three hundred years from now, where humanity has settled Earth, Mars, and the Belt. The story begins when they discover the protomolecule from an alien civilization, and a mystery is gradually revealed. Each season expands to a broader scope, finally reaching a galaxy-spanning human empire.

- Sci-fi novel:

  - A Fire upon the Deep: A fantastic novel involving multiple levels of super-intelligence, and how some individuals’ actions fundamentally change the future of the galaxy.